What are the options for data center interconnection?

- Categories: Industry News

- Time of issue: 2022-07-26



To better meet the needs of cloud data centers, many data center network solutions have emerged. For example, Huawei's data center switches (CloudEngine series), data center controller (iMaster NCE-Fabric), and intelligent network analysis platform (iMaster NCE-FabricInsight) support the following two recommended data center interconnection (DCI) solutions.

End-to-end VXLAN scheme

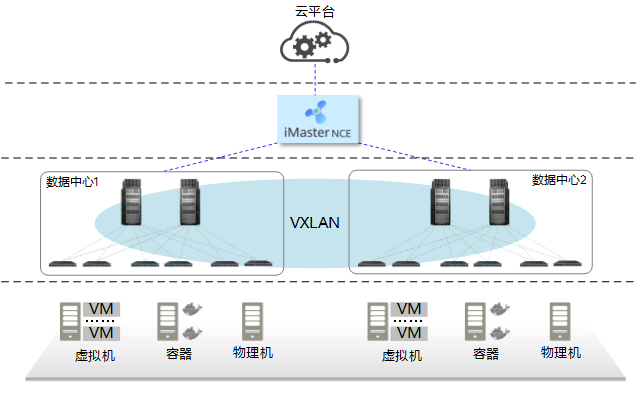

In data center interconnection based on end-to-end VXLAN tunnels, the compute and network resources of multiple DCs form a unified resource pool that is centrally managed by one cloud platform and one iMaster NCE-Fabric. The DCs form a single end-to-end VXLAN domain, so users' Virtual Private Clouds (VPCs) and subnets can be deployed across DCs and services can communicate directly. The following figure shows the deployment architecture.

Schematic diagram of the end-to-end VXLAN solution

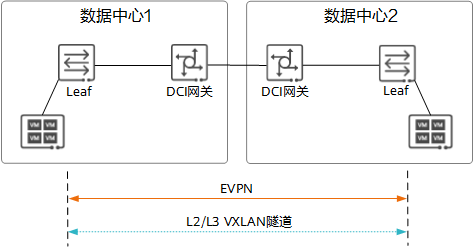
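In a single end-to-end VXLAN domain, each subnet is identified by one 24-bit VXLAN Network Identifier (VNI) that means the same thing in every DC. As a minimal sketch, the snippet below packs and parses the 8-byte VXLAN header defined in RFC 7348 (a flags byte with the I bit set, a 24-bit VNI, and zeroed reserved fields). It illustrates the standard encapsulation only; it is not taken from any Huawei configuration, and the VNI value used below is an arbitrary example.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (RFC 7348)
FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(1) reserved(3) VNI(3) reserved(1)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags in the top byte, 24 reserved bits set to zero.
    # Second 32-bit word: VNI in the top 24 bits, 8 reserved bits set to zero.
    return struct.pack("!II", FLAG_VNI_VALID << 24, vni << 8)


def unpack_vxlan_header(header: bytes) -> int:
    """Return the VNI carried in a VXLAN header after checking the I flag."""
    word1, word2 = struct.unpack("!II", header[:8])
    if ((word1 >> 24) & FLAG_VNI_VALID) == 0:
        raise ValueError("I flag not set; VNI is not valid")
    return word2 >> 8
```

For example, `pack_vxlan_header(5010).hex()` returns `'0800000000139200'`, and unpacking that header yields 5010 again. Because every DC in the domain reads the same VNI the same way, a leaf in one DC can decapsulate traffic from a leaf in another without any translation.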

In this solution, end-to-end VXLAN tunnels must be established between the data centers. As shown in the following figure, underlay routes must first be reachable between the data centers. Second, at the overlay layer, EVPN is deployed between the leaf devices in the two data centers. The leaf devices at both ends then discover each other through EVPN and exchange VXLAN encapsulation information through EVPN routes, which triggers the establishment of end-to-end VXLAN tunnels.

The end-to-end VXLAN tunnel diagram
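The discovery step can be pictured as leaves advertising (VTEP address, VNI) pairs and building a tunnel toward every remote VTEP that advertises a VNI they also host. The sketch below is a deliberately simplified model, loosely following the EVPN Inclusive Multicast Ethernet Tag (Type 3) route used with VXLAN in RFC 8365. The `Leaf` and `ImetRoute` classes, addresses, and VNIs are hypothetical, and BGP sessions, route reflectors, and MAC/IP (Type 2) routes are omitted.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ImetRoute:
    """Simplified EVPN Type 3 route: 'VTEP <vtep_ip> hosts <vni>'."""
    vtep_ip: str
    vni: int


@dataclass
class Leaf:
    """Hypothetical leaf/VTEP; tunnels are recorded as (remote VTEP, VNI) pairs."""
    name: str
    vtep_ip: str
    vnis: set[int]
    tunnels: set[tuple[str, int]] = field(default_factory=set)

    def advertise(self) -> list[ImetRoute]:
        return [ImetRoute(self.vtep_ip, vni) for vni in sorted(self.vnis)]

    def receive(self, route: ImetRoute) -> None:
        # Ignore our own routes and VNIs we do not host; otherwise bring up a tunnel.
        if route.vtep_ip != self.vtep_ip and route.vni in self.vnis:
            self.tunnels.add((route.vtep_ip, route.vni))


def exchange(leaves: list[Leaf]) -> None:
    """Deliver every leaf's routes to every leaf (stands in for the BGP EVPN overlay)."""
    routes = [route for leaf in leaves for route in leaf.advertise()]
    for leaf in leaves:
        for route in routes:
            leaf.receive(route)
```

With `Leaf("DC1-Leaf1", "10.1.0.1", {5010, 5020})` and `Leaf("DC2-Leaf1", "10.2.0.1", {5010})`, one call to `exchange` leaves each side with a single entry for VNI 5010 pointing at the other DC's VTEP, while VNI 5020 stays local. The model keeps one entry per (VTEP, VNI) pair for clarity; real devices typically build one tunnel per remote VTEP and bind several VNIs to it.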

This scheme mainly suits the Multi-PoD scenario. A Point of Delivery (PoD) is a group of relatively independent physical resources, and Multi-PoD means that one iMaster NCE-Fabric manages multiple PoDs, which together form a single end-to-end VXLAN domain. This scenario applies to interconnecting small data centers that are close together, such as data centers in the same city.
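The defining property of Multi-PoD is that one controller owns one VNI space for all PoDs, so a subnet stretched across PoDs keeps a single VNI. The toy model below illustrates only that property. `FabricController`, its methods, and the starting VNI are hypothetical and do not represent the iMaster NCE-Fabric API.

```python
class FabricController:
    """Hypothetical single controller managing several PoDs as one VXLAN domain."""

    def __init__(self, pods: list[str], first_vni: int = 10000):
        self.pods = set(pods)
        self._next_vni = first_vni
        self.subnet_vni: dict[str, int] = {}      # subnet -> VNI, shared by all PoDs
        self.placement: dict[str, set[str]] = {}  # subnet -> PoDs where it is deployed

    def deploy_subnet(self, subnet: str, pod: str) -> int:
        """Deploy a subnet in a PoD and return the VNI it uses there."""
        if pod not in self.pods:
            raise ValueError(f"{pod} is not managed by this controller")
        # Allocate a VNI the first time the subnet appears; reuse it in every other PoD.
        if subnet not in self.subnet_vni:
            self.subnet_vni[subnet] = self._next_vni
            self._next_vni += 1
        self.placement.setdefault(subnet, set()).add(pod)
        return self.subnet_vni[subnet]
```

Deploying `192.168.10.0/24` in `PoD1` and then in `PoD2` returns the same VNI both times (10000 with the default starting value), which is what lets hosts in that subnet communicate directly across PoDs. A PoD outside the controller's scope is rejected, mirroring the requirement that all sites sit under one iMaster NCE-Fabric.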

Segment VXLAN scheme

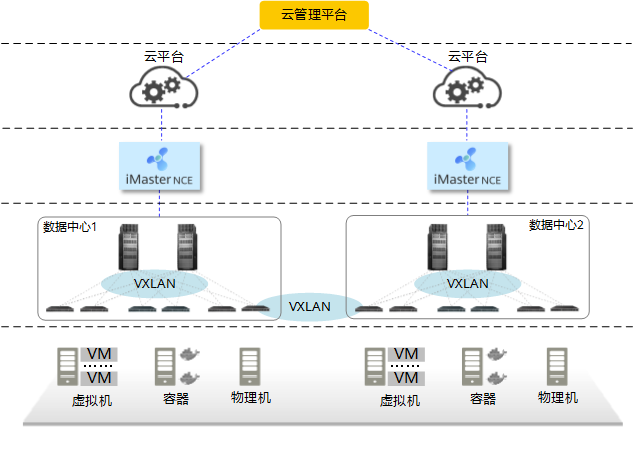

In data center interconnection based on Segment VXLAN tunnels, the compute and network resources of each DC in a multi-DC deployment form an independent resource pool, managed separately by that DC's own cloud platform and iMaster NCE-Fabric. Each DC is an independent VXLAN domain, and a separate DCI VXLAN domain is required for communication between DCs. Users' VPCs and subnets are deployed within their own data centers, so service communication between data centers must be orchestrated by an upper-layer cloud management platform. The following figure shows the deployment architecture.

Segment VXLAN scheme architecture diagram

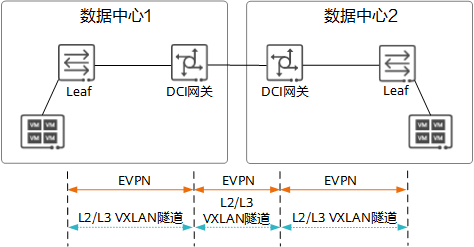
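Because each DC is its own VXLAN domain, the same subnet may carry a different VNI in each DC, and something at the DC edge has to relate the DC-local VNI to the VNI used in the DCI domain. The sketch below assumes that the DCI gateway keeps a per-DC mapping table for this purpose. VNI mapping at the gateway is an assumption made for illustration (a deployment may also keep the same VNI throughout), and `DciGateway`, `translate`, and all VNI values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DciGateway:
    """Hypothetical DCI gateway mapping DC-local VNIs to VNIs in the shared DCI domain."""
    dc: str
    local_to_dci: dict[int, int] = field(default_factory=dict)

    def bind(self, local_vni: int, dci_vni: int) -> None:
        """Record a mapping, e.g. as pushed by the upper-layer cloud management platform."""
        self.local_to_dci[local_vni] = dci_vni

    def to_dci(self, local_vni: int) -> int:
        return self.local_to_dci[local_vni]

    def from_dci(self, dci_vni: int) -> int:
        # Reverse lookup by scanning; a real device would keep a second index.
        for local, dci in self.local_to_dci.items():
            if dci == dci_vni:
                return local
        raise KeyError(dci_vni)


def translate(src: DciGateway, dst: DciGateway, src_local_vni: int) -> int:
    """VNI a packet carries after crossing src DC -> DCI domain -> dst DC."""
    return dst.from_dci(src.to_dci(src_local_vni))
```

If DC-A uses VNI 5010 for a subnet, DC-B uses 7010 for its counterpart, and both gateways bind their local VNI to DCI VNI 90010, then `translate(gw_a, gw_b, 5010)` returns 7010. In this model, choosing those `bind` entries is the orchestration work that the article assigns to the upper-layer cloud management platform.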

In this solution, VXLAN tunnels must be established both within and between data centers. As shown in the following figure, underlay routes must first be reachable between the data centers. Second, at the overlay layer, EVPN is deployed between the leaf devices and the DCI gateways inside each data center, and between the DCI gateways of different data centers. The devices then discover each other through EVPN and exchange VXLAN encapsulation information through EVPN routes, which triggers the establishment of Segment VXLAN tunnels: segments inside each data center and a segment between the DCI gateways.

Segment VXLAN scheme architecture diagram
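One way to see the structural difference between the two schemes is to count the VXLAN tunnels that cross DC boundaries. The calculation below assumes, for illustration only, the worst case in which every leaf hosts every VNI, so that in the end-to-end scheme each leaf tunnels to every leaf in every other DC, while in the segmented scheme only the DCI gateways tunnel across DCs and they form a full mesh. The function names and sample sizes are hypothetical, and the counts follow from these assumptions rather than from vendor sizing data.

```python
from math import comb


def inter_dc_tunnels_end_to_end(dcs: int, leaves_per_dc: int) -> int:
    """Leaf-to-leaf tunnels crossing DC boundaries in one VXLAN domain (worst case)."""
    return comb(dcs, 2) * leaves_per_dc ** 2


def inter_dc_tunnels_segment(dcs: int, gateways_per_dc: int) -> int:
    """Gateway-to-gateway tunnels in the DCI domain; leaves only reach local gateways."""
    return comb(dcs, 2) * gateways_per_dc ** 2


for dcs, leaves in [(2, 8), (2, 32), (4, 32)]:
    e2e = inter_dc_tunnels_end_to_end(dcs, leaves)
    seg = inter_dc_tunnels_segment(dcs, gateways_per_dc=2)
    print(f"{dcs} DCs x {leaves} leaves: end-to-end {e2e}, segment {seg}")
```

Under these assumptions the loop prints 64 vs. 4 tunnels for 2 DCs with 8 leaves each, 1024 vs. 4 for 2 DCs with 32 leaves, and 6144 vs. 24 for 4 DCs with 32 leaves. The end-to-end count grows with the square of the leaf count, while the segmented count depends only on the number of DCI gateways.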

This solution mainly suits the Multi-Site scenario, that is, interconnecting data centers located in different regions, or data centers that are too far apart to be managed by the same iMaster NCE-Fabric.
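The selection guidance in this article comes down to two questions: can one cloud platform and one iMaster NCE-Fabric manage all the sites, and are the sites small and close together (for example, in the same city)? The helper below simply encodes those two criteria as the article states them; `choose_dci_scheme`, `DciScheme`, and the boolean inputs are hypothetical names introduced for illustration.

```python
from enum import Enum


class DciScheme(Enum):
    END_TO_END_VXLAN = "end-to-end VXLAN (Multi-PoD)"
    SEGMENT_VXLAN = "Segment VXLAN (Multi-Site)"


def choose_dci_scheme(single_controller_feasible: bool, small_and_same_city: bool) -> DciScheme:
    """Encode the article's guidance; both inputs are yes/no design assessments."""
    # End-to-end VXLAN needs one cloud platform and one iMaster NCE-Fabric for every DC,
    # and is recommended for small data centers close together, e.g. in the same city.
    if single_controller_feasible and small_and_same_city:
        return DciScheme.END_TO_END_VXLAN
    # Different regions, or too far apart for one controller: each DC keeps its own
    # VXLAN domain and a separate DCI VXLAN domain connects them.
    return DciScheme.SEGMENT_VXLAN
```

For two small same-city sites under one controller, `choose_dci_scheme(True, True)` returns `DciScheme.END_TO_END_VXLAN`; any other combination falls back to Segment VXLAN.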




- Tel:86-(0)28-87988088

- Fax:86-(0)28-87988568

- Address: 4F, Bldg. D1, Mould Industrial Park, West High-tech Zone, Chengdu, Sichuan, P.R.C.

Sunstar Communication Technology Co.,Ltd

Copyright © 2020 Sunstar Communication Technology Co., Ltd. All Rights Reserved. Sichuan ICP Filing No. 19023203-1
